```python
import pandas as pd
from scipy.stats import chi2_contingency

# Load the Titanic dataset
df = pd.read_csv("data/Titanic.csv")

# Create a contingency table for 'Gender' and 'Survived'
table = pd.crosstab(df['Gender'], df['Survived'])

# Perform the chi-squared test
statistics, p, degrees_of_freedom, expected = chi2_contingency(table)

# Print the chi-squared statistic rounded to 3 decimal places
print(round(statistics, 3))
```

Explanation of each step:

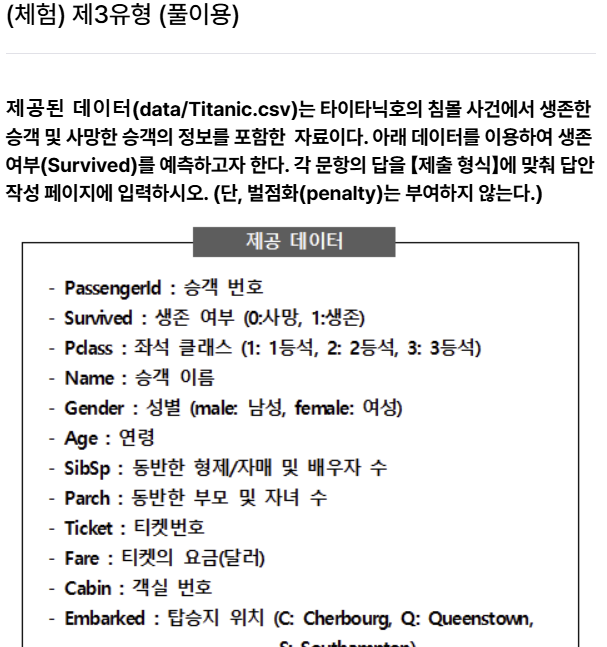

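Load the data: pd.read_csv("data/Titanic.csv") reads the Titanic dataset into a DataFrame.

Create the contingency table: pd.crosstab(df['Gender'], df['Survived']) builds a table of survivor and non-survivor counts for each gender.

Chi-squared test: chi2_contingency(table) runs a chi-squared test of independence on the contingency table. The function returns:

statistics: the chi-squared test statistic,

p: the p-value,

degrees_of_freedom: the degrees of freedom,

expected: the table of expected frequencies if there were no association between the variables.

Print: print(round(statistics, 3)) prints the test statistic rounded to 3 decimal places.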
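By default, chi2_contingency runs with correction=True. When the table has 1 degree of freedom, as a 2x2 Gender × Survived table does, it applies Yates' continuity correction, so the printed statistic is the corrected value. The stdlib-only sketch below reproduces that calculation for a 2x2 table. The helper name chi2_2x2 and the counts in the usage lines are hypothetical and are not taken from the Titanic file.

```python
import math

def chi2_2x2(observed, yates=True):
    """Chi-squared test of independence for a 2x2 table (hypothetical helper)."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    # Expected count under independence: row total * column total / grand total
    expected = [[r * c / n for c in col_totals] for r in row_totals]
    stat = 0.0
    for obs_row, exp_row in zip(observed, expected):
        for o, e in zip(obs_row, exp_row):
            diff = abs(o - e)
            if yates:
                # Yates' correction: move each observed count up to 0.5 toward its expected count
                diff -= min(0.5, diff)
            stat += diff ** 2 / e
    dof = (len(observed) - 1) * (len(observed[0]) - 1)  # always 1 for a 2x2 table
    # For 1 degree of freedom, the chi-squared upper-tail probability is erfc(sqrt(x / 2))
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p, dof, expected

# Hypothetical counts: rows = two groups, columns = not survived / survived
stat, p, dof, expected = chi2_2x2([[30, 10], [20, 40]])
print(round(stat, 3), p, dof, expected)
```

Passing yates=False gives the uncorrected Pearson statistic, which corresponds to calling chi2_contingency(table, correction=False).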

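```python
from statsmodels.api import Logit

# Encode 'Gender' as numeric: male -> 0, female -> 1
df['Gender'] = df['Gender'].map({'male': 0, 'female': 1})

x = df[['Gender', 'SibSp', 'Parch', 'Fare']]
y = df['Survived']

# Note: no constant column is added, so this model has no intercept term
model = Logit(y, x)
result = model.fit()
print(result.summary())
```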

```
Optimization terminated successfully.
         Current function value: 0.610661
         Iterations 6
                           Logit Regression Results
==============================================================================
Dep. Variable:               Survived   No. Observations:                  891
Model:                          Logit   Df Residuals:                      887
Method:                           MLE   Df Model:                            3
Date:                Sun, 10 Nov 2024   Pseudo R-squ.:                 0.08297
Time:                        14:33:06   Log-Likelihood:                -544.10
converged:                       True   LL-Null:                       -593.33
Covariance Type:            nonrobust   LLR p-value:                 3.335e-21
==============================================================================
                 coef    std err          z      P>|z|      [0.025      0.975]
------------------------------------------------------------------------------
Gender         1.6051      0.169      9.522      0.000       1.275       1.935
SibSp         -0.5659      0.102     -5.562      0.000      -0.765      -0.367
Parch         -0.2331      0.105     -2.224      0.026      -0.438      -0.028
Fare           0.0013      0.002      0.892      0.373      -0.002       0.004
==============================================================================
```
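This first fit has no intercept. Logit uses the columns of x exactly as given and does not add a constant itself. A model without an intercept is therefore forced to predict log-odds of 0, which is a survival probability of exactly 0.5, for any passenger whose predictors are all 0.

The sketch below makes this visible by applying the logistic function to the coefficients from the summary above. The passenger profiles are hypothetical.

```python
import math

def sigmoid(z):
    """Logistic function: converts log-odds into a probability."""
    return 1.0 / (1.0 + math.exp(-z))

# Coefficients from the no-intercept summary above (Gender: male = 0, female = 1)
coef = {'Gender': 1.6051, 'SibSp': -0.5659, 'Parch': -0.2331, 'Fare': 0.0013}

def predict(passenger):
    # No intercept term: the log-odds is only the weighted sum of the predictors
    log_odds = sum(coef[name] * value for name, value in passenger.items())
    return sigmoid(log_odds)

# Hypothetical passenger with every predictor equal to 0 (male, travelling alone, zero fare)
baseline = {'Gender': 0, 'SibSp': 0, 'Parch': 0, 'Fare': 0}
print(predict(baseline))  # 0.5, fixed by the missing intercept
```

Adding sm.add_constant(x) lets the model estimate this baseline rather than pinning it at 0.5.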

v1.0:

```python
import statsmodels.api as sm

df['Gender'] = df['Gender'].map({'male': 0, 'female': 1})

# Select the predictor variables
x = df[['Gender', 'SibSp', 'Parch', 'Fare']]

# Select the target variable
y = df['Survived']

# Add a constant to the predictor variables (for the intercept term in logistic regression)
x = sm.add_constant(x)

# Fit the logistic regression model
model = sm.Logit(y, x)
result = model.fit()

# Print the summary of the model
print(result.summary())
```

v2.0:

```python
import statsmodels.api as sm
from sklearn.preprocessing import LabelEncoder

encoder = LabelEncoder()
df['Gender'] = encoder.fit_transform(df['Gender'])
x = df[['Gender', 'SibSp', 'Parch', 'Fare']]
y = df['Survived']
x = sm.add_constant(x)
model = sm.Logit(y, x)
result = model.fit()
print(result.summary())
```

# Problem 2

This code uses statsmodels to fit a logistic regression that analyzes how selected variables in the Titanic dataset affect the probability of survival. Each part is explained below.

```python
import statsmodels.api as sm
from sklearn.preprocessing import LabelEncoder

# Encode 'Gender' as numeric
encoder = LabelEncoder()
df['Gender'] = encoder.fit_transform(df['Gender'])
```

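Importing the libraries: statsmodels provides the logistic regression model, and LabelEncoder from sklearn converts the categorical variable to numeric form.

Encoding Gender: LabelEncoder converts the categorical Gender column ("male", "female") into integer codes. It assigns the codes in sorted order of the labels, so "female" becomes 0 and "male" becomes 1. This is the reverse of the map({'male': 0, 'female': 1}) used earlier. The step is required because the logistic regression model needs numeric inputs.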
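LabelEncoder learns its classes from the sorted unique values of the column, so the codes follow alphabetical order, not the order in which values appear. The stdlib sketch below imitates that ordering. It is a stand-in, not sklearn's implementation. It compares the result with the explicit map used earlier, using a hypothetical sample of values.

This ordering explains the sign of the Gender coefficient in each output. It is negative in the LabelEncoder-based output, where male = 1, and positive in the output that used map, where female = 1.

```python
# Hypothetical sample of raw Gender values
genders = ['male', 'female', 'female', 'male', 'female']

# Imitate LabelEncoder: classes are the sorted unique labels; code = index in that list
classes = sorted(set(genders))
label_codes = [classes.index(g) for g in genders]

# The explicit mapping used in the map() version
manual_codes = [{'male': 0, 'female': 1}[g] for g in genders]

print(classes)       # ['female', 'male']
print(label_codes)   # [1, 0, 0, 1, 0]
print(manual_codes)  # [0, 1, 1, 0, 1]
```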

```python
x = df[['Gender', 'SibSp', 'Parch', 'Fare']]
y = df['Survived']
```

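Selecting the predictors (x): the code creates x, a DataFrame with the following columns:

Gender: encoded as 0 or 1.

SibSp: number of siblings/spouses aboard.

Parch: number of parents/children aboard.

Fare: passenger fare.

Selecting the target (y): y is set to Survived, which indicates whether the passenger survived (1) or not (0).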

```python
x = sm.add_constant(x)
```

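Adding a constant (intercept): sm.add_constant(x) adds a column of ones named const to x, so the logistic regression includes an intercept term. Logit does not add this column automatically.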

```python
model = sm.Logit(y, x)
result = model.fit()
```

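Creating and fitting the logistic regression model:

model = sm.Logit(y, x) initializes a logistic regression model with y as the dependent variable and the columns of x as the independent variables.

result = model.fit() fits the model to the data by maximum likelihood, estimating a coefficient for each predictor.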
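Logit.fit() maximizes the log-likelihood, by default with Newton-Raphson (method='newton'). The "Current function value" it prints is the negative log-likelihood divided by the number of observations. In the summaries in this post, 429.52 / 891 ≈ 0.482 and 544.10 / 891 ≈ 0.611.

The sketch below implements the same Newton update in plain Python for an intercept plus one predictor. The function name fit_logit_1d and the toy data are hypothetical. With a single 0/1 predictor the maximum-likelihood answer is known in closed form, which makes the result easy to check:

- the intercept is the log-odds of the x = 0 group;
- the slope is the log odds ratio between the two groups.

```python
import math

def fit_logit_1d(xs, ys, max_iter=25, tol=1e-10):
    """Newton-Raphson for log-odds = b0 + b1 * x (hypothetical helper)."""
    b0, b1 = 0.0, 0.0
    for _ in range(max_iter):
        # Gradient of the log-likelihood and the 2x2 information matrix
        g0 = g1 = 0.0
        i00 = i01 = i11 = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = p * (1.0 - p)
            g0 += y - p
            g1 += (y - p) * x
            i00 += w
            i01 += w * x
            i11 += w * x * x
        # Newton step: solve [[i00, i01], [i01, i11]] @ step = [g0, g1]
        det = i00 * i11 - i01 * i01
        step0 = (i11 * g0 - i01 * g1) / det
        step1 = (i00 * g1 - i01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < tol:
            break
    # Average negative log-likelihood, the quantity statsmodels reports as "Current function value"
    nll = 0.0
    for x, y in zip(xs, ys):
        p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
        nll -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return b0, b1, nll / len(xs)

# Toy data: 3 of 4 survive when x = 0, 1 of 4 survive when x = 1
xs = [0, 0, 0, 0, 1, 1, 1, 1]
ys = [1, 1, 1, 0, 1, 0, 0, 0]
b0, b1, fval = fit_logit_1d(xs, ys)
# b0 should be close to math.log(3) and b1 close to -math.log(9)
print(b0, b1, fval)
```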

```python
print(result.summary())
```

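Printing the model summary: result.summary() provides a detailed report that includes:

The coefficient of each predictor, which gives its effect on the log-odds of survival.

p-values for testing whether each coefficient differs from zero.

Goodness-of-fit measures, such as the log-likelihood and pseudo R-squared, for evaluating the model.

With this model you can see how each variable (Gender, SibSp, Parch, Fare) is associated with the probability of surviving the Titanic.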
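The coefficients are on the log-odds scale. To get a predicted survival probability, add const to the sum of each coefficient × value, then pass the result z through the logistic function 1 / (1 + e^(-z)).

The sketch below does this with the coefficients this model reports in its summary, where LabelEncoder coded female as 0 and male as 1. The two passenger profiles are hypothetical.

```python
import math

# Coefficients from the LabelEncoder-based summary (Gender: female = 0, male = 1)
params = {'const': 0.9466, 'Gender': -2.6422, 'SibSp': -0.3539,
          'Parch': -0.2007, 'Fare': 0.0147}

def survival_probability(passenger):
    """Linear predictor on the log-odds scale, then the logistic function."""
    z = params['const'] + sum(params[k] * v for k, v in passenger.items())
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical passengers: one sibling/spouse aboard, no parents/children, fare of 30
female = {'Gender': 0, 'SibSp': 1, 'Parch': 0, 'Fare': 30}
male = {'Gender': 1, 'SibSp': 1, 'Parch': 0, 'Fare': 30}

print(round(survival_probability(female), 3))
print(round(survival_probability(male), 3))
```

On a fitted statsmodels result, result.predict(x) performs the same calculation for every row of the design matrix.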

```
Optimization terminated successfully.
         Current function value: 0.482065
         Iterations 6
                           Logit Regression Results
==============================================================================
Dep. Variable:               Survived   No. Observations:                  891
Model:                          Logit   Df Residuals:                      886
Method:                           MLE   Df Model:                            4
Date:                Sun, 10 Nov 2024   Pseudo R-squ.:                  0.2761
Time:                        14:39:08   Log-Likelihood:                -429.52
converged:                       True   LL-Null:                       -593.33
Covariance Type:            nonrobust   LLR p-value:                 1.192e-69
==============================================================================
                 coef    std err          z      P>|z|      [0.025      0.975]
------------------------------------------------------------------------------
const          0.9466      0.169      5.590      0.000       0.615       1.279
Gender        -2.6422      0.186    -14.197      0.000      -3.007      -2.277
SibSp         -0.3539      0.098     -3.604      0.000      -0.546      -0.161
Parch         -0.2007      0.112     -1.792      0.073      -0.420       0.019
Fare           0.0147      0.003      5.553      0.000       0.010       0.020
==============================================================================
```
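Each row of the coefficient table is a Wald test:

- z = coef / std err;
- the two-sided p-value is P(|Z| > |z|) for a standard normal Z;
- the 95% interval is coef ± 1.96 × std err.

The sketch below recomputes the Parch row from the rounded numbers shown above, so the last digit can differ slightly from the printed table. The helper name wald_row is hypothetical.

```python
import math

def wald_row(coef, std_err, z_crit=1.959964):
    """Recompute z, two-sided p-value and 95% CI for one coefficient (hypothetical helper)."""
    z = coef / std_err
    # Two-sided normal p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p = math.erfc(abs(z) / math.sqrt(2))
    ci = (coef - z_crit * std_err, coef + z_crit * std_err)
    return z, p, ci

# Parch row from the summary: coef -0.2007, std err 0.112
z, p, ci = wald_row(-0.2007, 0.112)
print(round(z, 3), round(p, 3), tuple(round(b, 3) for b in ci))
```

The interval for Parch includes 0 and p is about 0.073, so Parch is not significant at the 5% level in this model.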

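The same model can also be fit through the formula interface. Logit.from_formula adds the intercept (reported as Intercept) automatically, so add_constant is not needed:

```python
import statsmodels.api as sm
from sklearn.preprocessing import LabelEncoder

encoder = LabelEncoder()
df['Gender'] = encoder.fit_transform(df['Gender'])

# The formula interface adds an intercept term automatically
formula = "Survived ~ Gender + SibSp + Parch + Fare"
result = sm.Logit.from_formula(formula, df).fit()
print(result.summary())
```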

# Problem 3

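```python
import numpy as np

# Odds ratio for SibSp: exponentiate the fitted coefficient
print(round(np.exp(result.params['SibSp']), 3))

# Same calculation using the coefficient shown in the summary table
print(round(np.exp(-0.3539), 3))
```

```
0.702
0.702
```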
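Exponentiating a logistic regression coefficient turns it into an odds ratio. For SibSp, e^(-0.3539) ≈ 0.702. Each additional sibling or spouse aboard therefore multiplies the odds of survival by about 0.70, roughly 30% lower odds, holding Gender, Parch and Fare fixed. Applying the same transformation to the interval bounds from the summary gives a 95% confidence interval for the odds ratio.

The sketch below uses the values printed in the summary. It also checks the "multiplies the odds" reading on a hypothetical passenger profile.

```python
import math

coef, ci_low, ci_high = -0.3539, -0.546, -0.161  # SibSp row of the summary

odds_ratio = math.exp(coef)
or_ci = (math.exp(ci_low), math.exp(ci_high))
pct_change = (odds_ratio - 1) * 100  # percent change in odds per extra sibling/spouse

print(round(odds_ratio, 3))  # 0.702
print(tuple(round(b, 3) for b in or_ci))
print(round(pct_change, 1))

# Check: odds at SibSp = 2 divided by odds at SibSp = 1 equals exp(coef)
params = {'const': 0.9466, 'Gender': -2.6422, 'SibSp': -0.3539,
          'Parch': -0.2007, 'Fare': 0.0147}

def odds(passenger):
    # In a logistic model, odds = exp(log-odds)
    z = params['const'] + sum(params[k] * v for k, v in passenger.items())
    return math.exp(z)

base = {'Gender': 0, 'SibSp': 1, 'Parch': 0, 'Fare': 30}  # hypothetical passenger
plus_one = dict(base, SibSp=2)
print(round(odds(plus_one) / odds(base), 3))
```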
